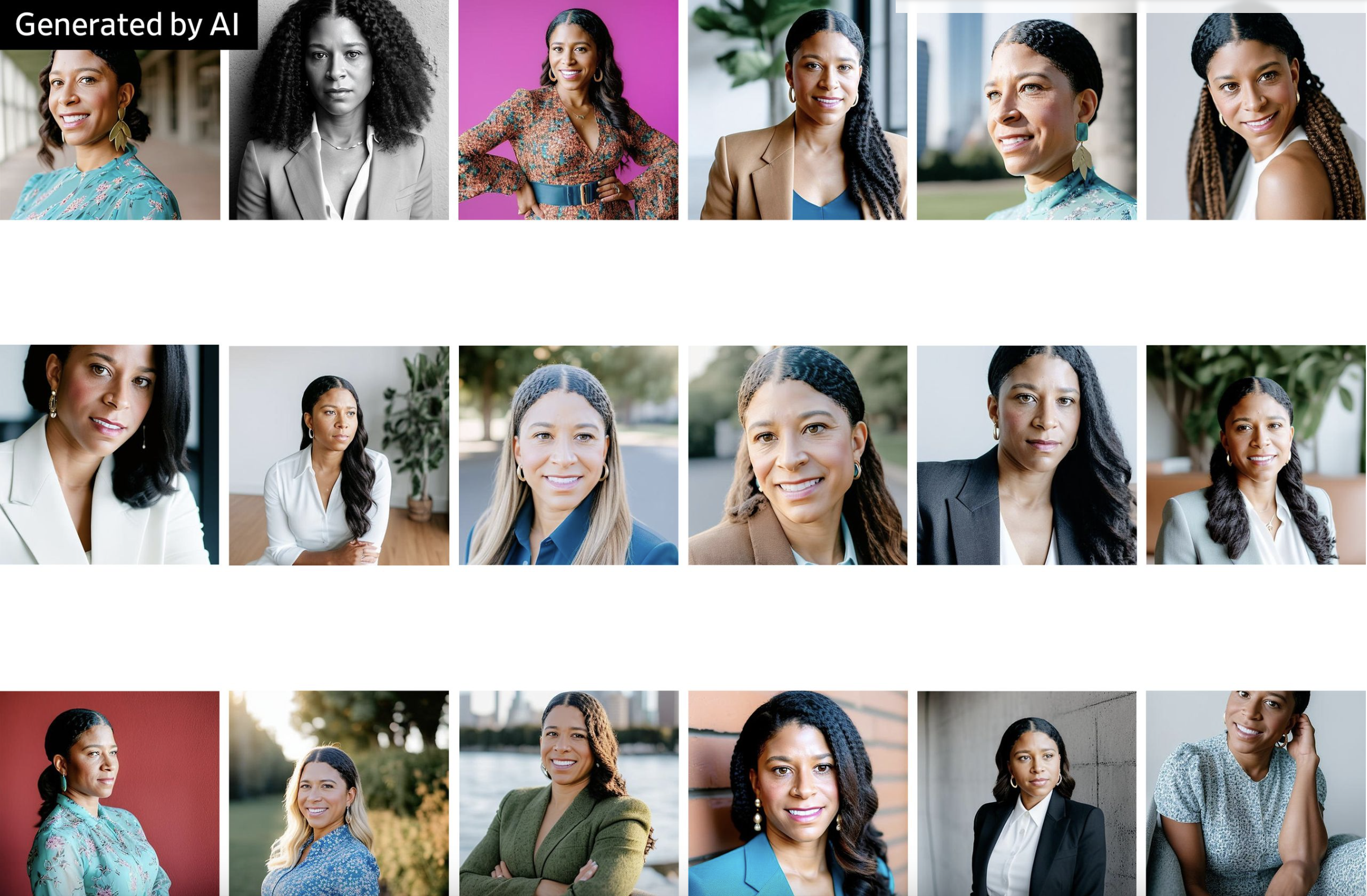

AI-Generated Headshots: Unintended Bias and Challenges for Women of Color

In the rapidly evolving landscape of professional image creation, using artificial intelligence (AI) to generate headshots has gained popularity among young workers seeking to enhance their digital profiles. However, The Wall Street Journal (WSJ) has reported a concerning trend: women of color are voicing dissatisfaction with AI-generated headshots, citing altered skin tones, distorted hairstyles, and changed facial features.

This trend raises important concerns for public relations (PR) executive leadership, who need to recognize and address the technology's implications for inclusivity, diversity, and the public image of both individuals and companies.

The allure of AI-generated headshots lies in their cost-effectiveness and ease of use. For a fraction of the price of a professional photoshoot, individuals can upload their own photos to various AI platforms, which then use complex models to create polished images suitable for professional contexts. However, the technology has stumbled at accurately representing the diversity of its users.

Many women of color say these AI misfires are more than mere technological glitches and point instead to biases ingrained in the base technology itself. These biases stem from the datasets used for training, which often perpetuate racial and gender stereotypes. For instance, platforms may rely heavily on photos of men for certain professions, skewing the results when generating images for women. This bias not only affects individuals but also reflects poorly on the companies offering such AI services.

The implications for PR executive leadership are profound. In an era where companies are striving to foster inclusivity and equity, AI-generated headshots that misrepresent or disregard racial and gender identities can lead to public relations crises. Negative perceptions can arise, damaging a company's reputation and its relationships with diverse customer bases and stakeholders.

Experts in the field emphasize the importance of curating training data and regularly updating AI models to mitigate bias. This proactive approach aligns with the principles of responsible AI development and helps ensure that the technology benefits everyone without perpetuating discriminatory patterns. It also gives PR executive leadership reason to hold AI providers accountable and to encourage transparency in how AI models are trained and refined.

Several AI companies are already taking steps to rectify these issues. Secta Labs, for instance, has released an updated version of its technology that allows users to specify their race, resulting in more accurate and culturally sensitive headshots. Stability AI, a key player in providing base models for various AI services, is also working to address biases in its models and improve the accuracy of images for individuals of all racial and ethnic backgrounds.

The experiences of women like Nicole Harris, who had a bindi added to her AI-generated headshot despite not being Hindu, show how important it is to understand and respect cultural nuances. For PR executive leadership, these examples underscore the need for AI service providers to prioritize thorough training data curation and ongoing model refinement. Doing so would help the technology enhance individuals' professional presence without inadvertently causing offense or perpetuating stereotypes.

In conclusion, the rise of AI-generated headshots, combined with the challenges faced by women of color, demands the attention of PR executive leadership. It highlights the critical importance of responsible AI development, data curation, and ongoing refinement to reduce bias and inaccuracies. By addressing these concerns, PR leaders can help ensure that AI technologies align with their organizations' commitment to diversity, equity, and authentic representation, bolstering their public image and strengthening relationships with stakeholders.